Automated project reporting made easy for everyone

"Really good looking views and easy setup. Ideal for providing open visibility for the progress across the company."

accessible everywhere

Embed insights anywhere

Share Screenful dashboards, charts, and reports with your team by embedding them in your favorite tools.

complete toolkit

Zero to value in minutes

Streamline your reporting process with Screenful. Say goodbye to manual busywork so you can focus on delivering value.

Screenful tracks metrics across tools. Whether your teams use Jira, Asana, GitHub, or other tools, you get unified reporting across all of them!

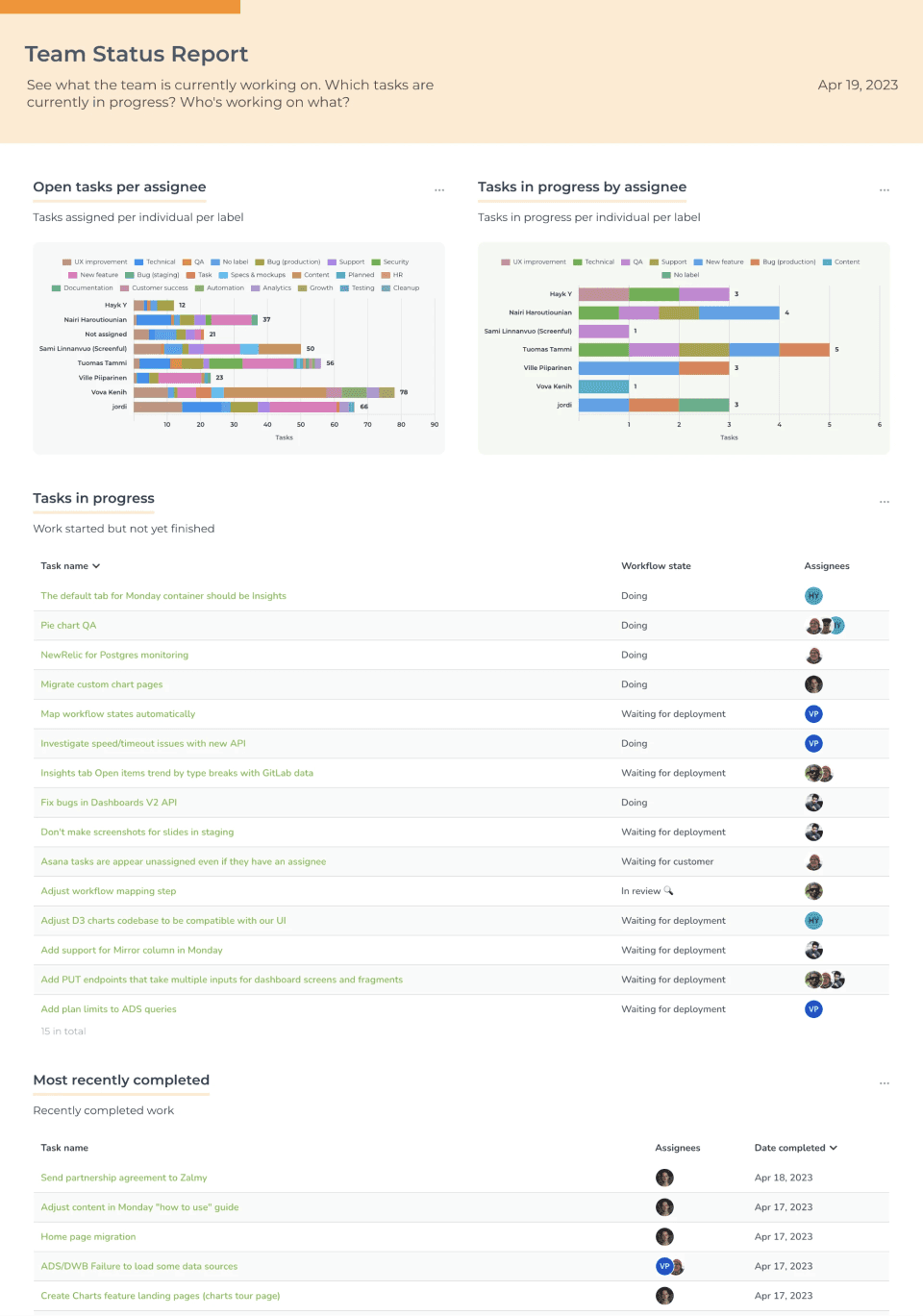

10+ Report templates

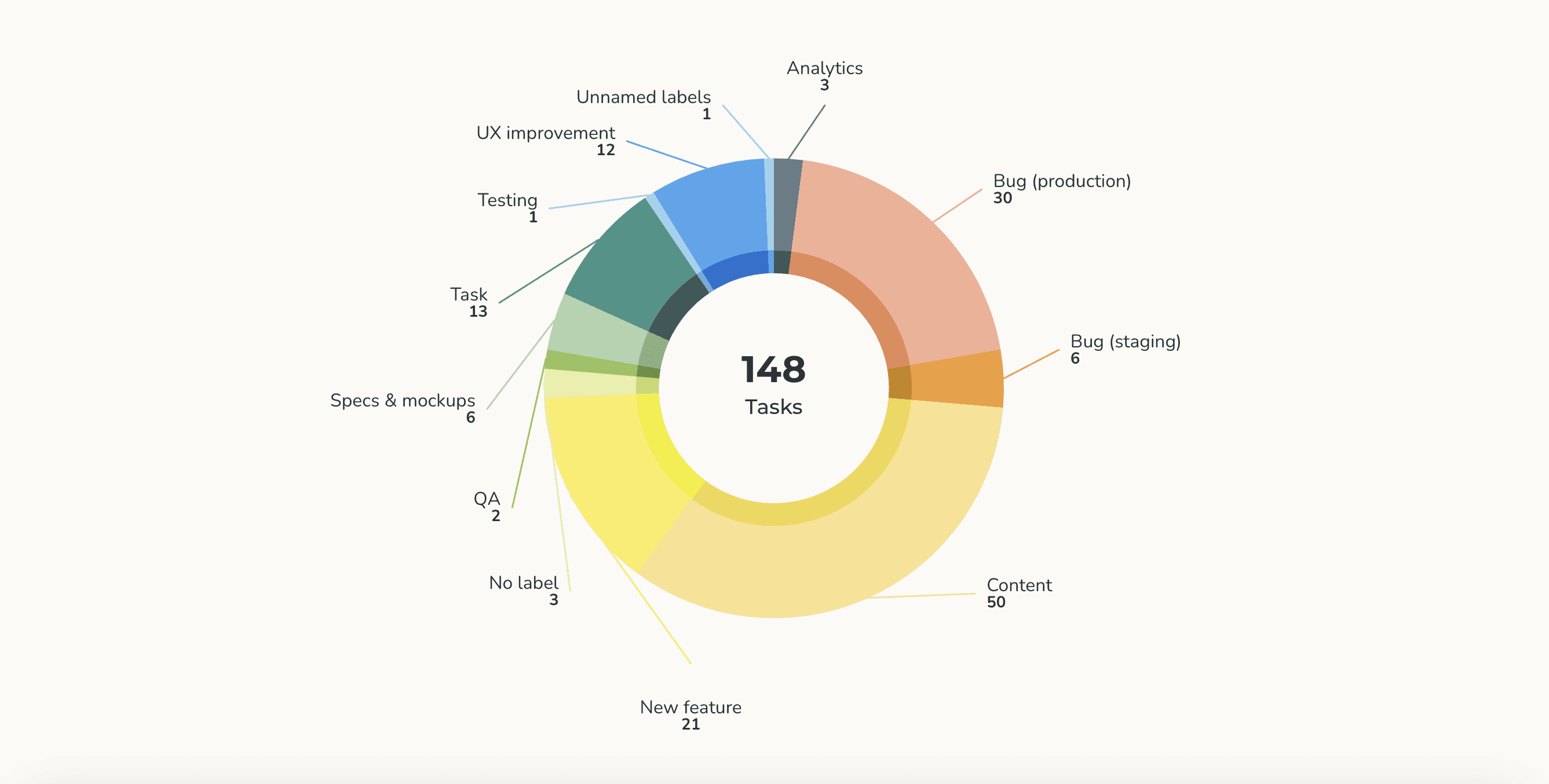

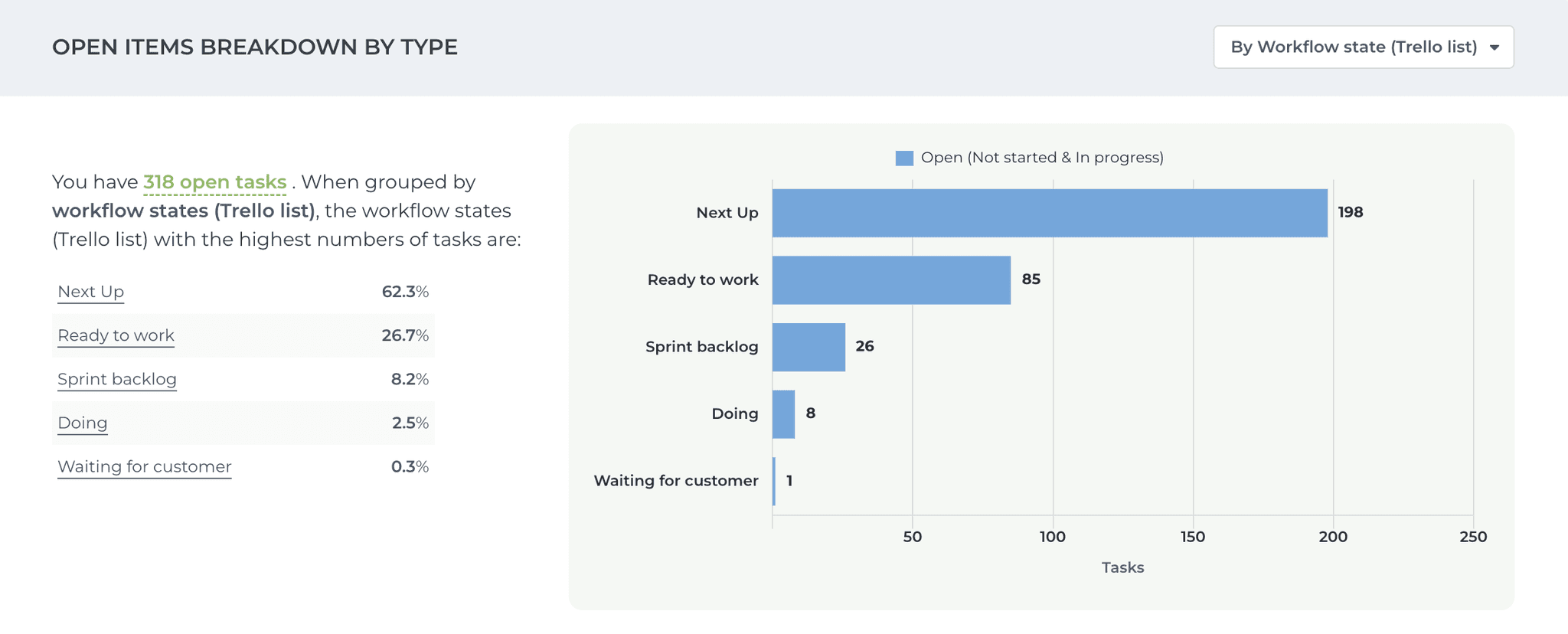

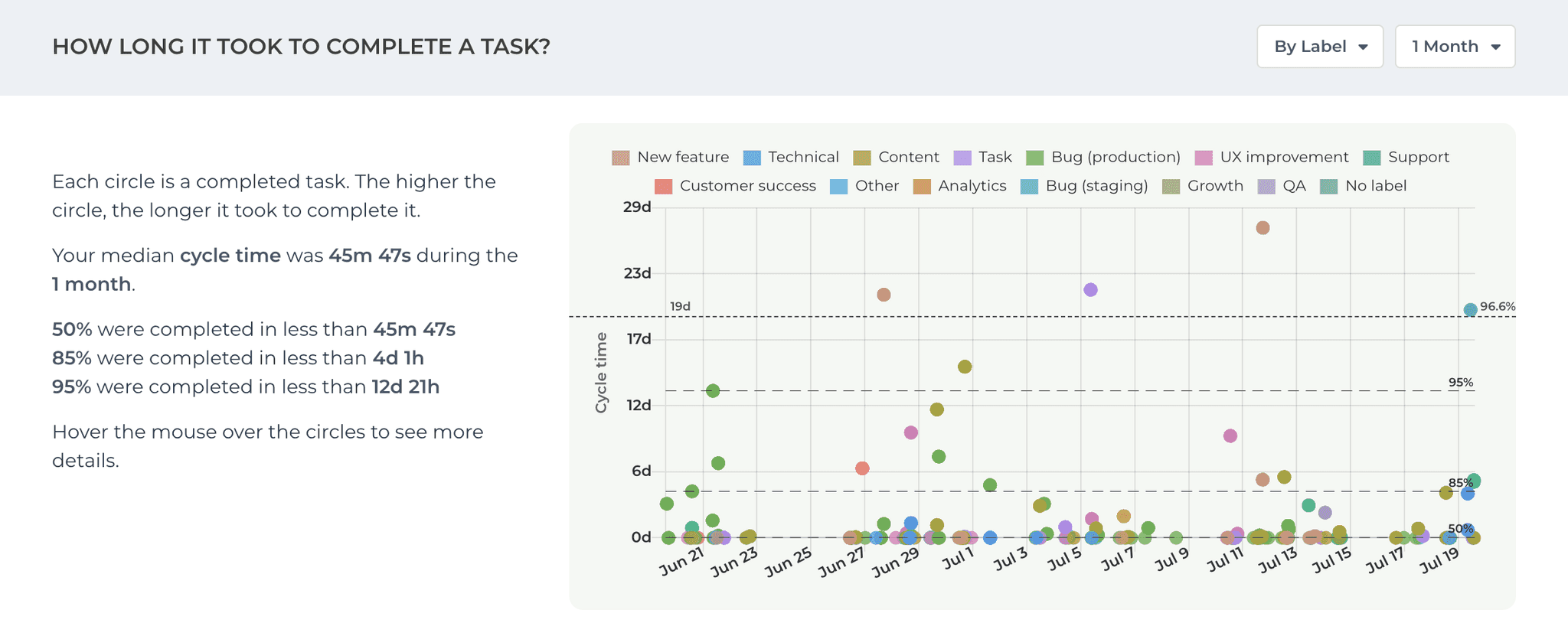

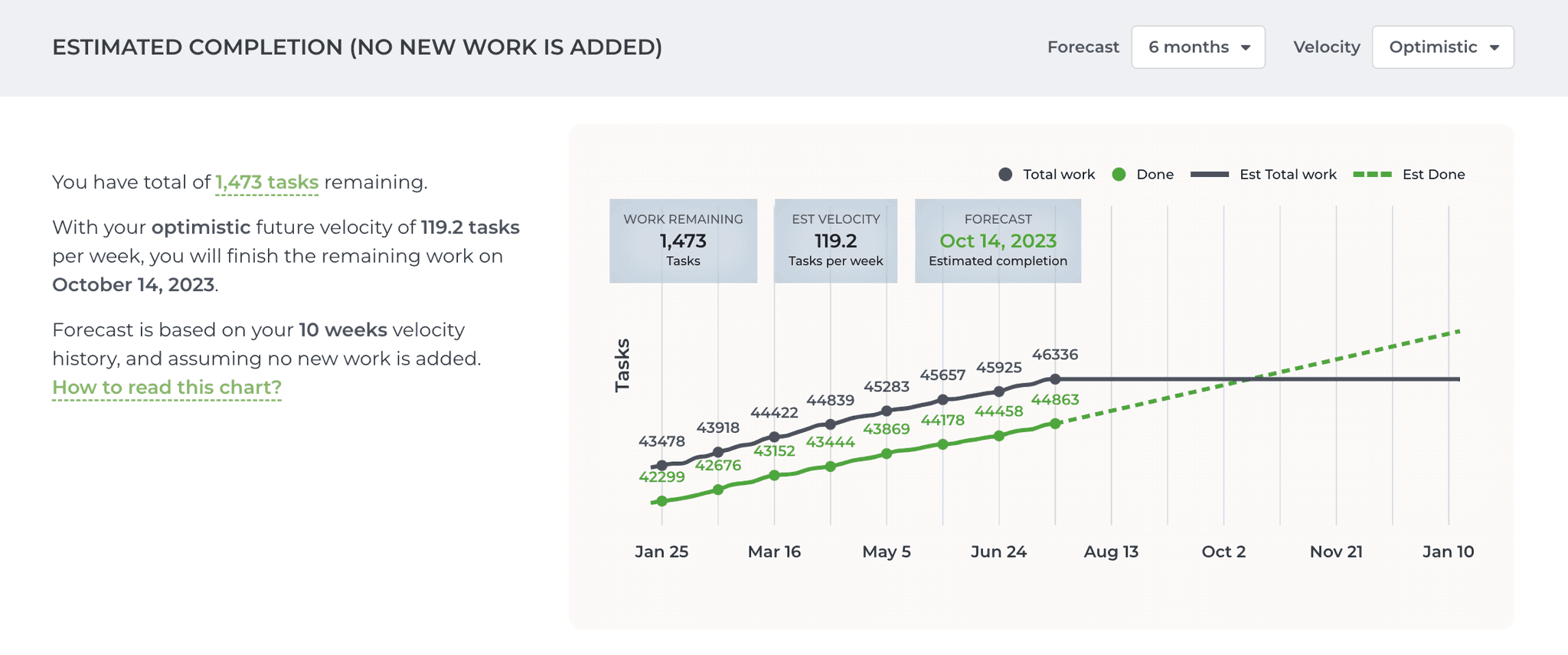

Out-of-the-box insights

Filters and segments

PDF, CSV, and JSON export

Data encryption

30+ Chart templates

Email and Slack integrations

Custom fields

Embed anywhere

product news

🚢 What have we shipped lately?

We ship updates to our SaaS product daily. About once a month, we publish release notes covering the new features.